last summer, I wrote about a change I wanted to be part of

quick summary:

but a change does not happen overnight

Let’s Start Here.

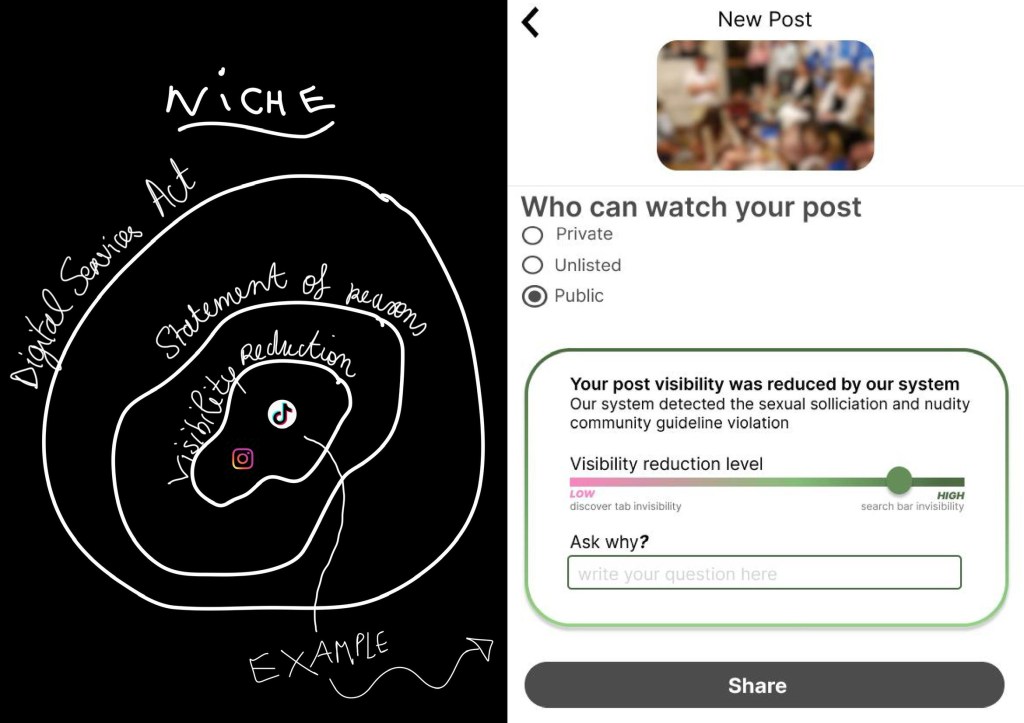

a pilot study focused on the Digital Services Act, DSA for short (available here) – that came to light thanks to

Philipp Lorenz-Spreen, Lorenzo Porcaro, Caroline Are, Other Nature Feminist queer & vegan s3xshop in Berlin, Hetero cringe, Fuck Yeah Sexshopkollektiv…1

niche

as the DSA could lead to a zillioooooooooooooooooooooooooooooooooooooon user research questions, I carved myself a little niche – the statement of reasons for users subject to visibility reduction on TikTok and Instagram

?!!!if you are not familiar with the DSA, this niche is gibberish to you!!!?

the statement of reasons for users subject to visibility reduction on TikTok and Instagram = notification users get when they are shadow banned

?!!!maybe shadow banning is also gibberish to you!!!?

the statement of reasons for users subject to visibility reduction on TikTok and Instagram = notification users get when their content does not appear in people’s feeds because it’s identified as problematic2

even though this is really specific

it is also an ecological niche (in biology, a niche refers to the functional role an organism plays within an ecosystem) – it plays the role of content moderation decision transparency and contestability in the online regulation ecosystem

and this makes it a good niche

but carving myself a good niche wasn’t enough, I needed to actually do user research…

user research & :(failure(:

so I asked one question – which notification user interface could reduce users’ feeling of powerlessness??????????????????????????????????????????

my idea was to test notification user interfaces that differ in 3 ways

- the timing of the notification (before, during, after posting)

- the presence of a visibility reduction scale

- the options to ask why

find all the notification UIs here

:[failure(:

after designing 18 different notifications, I reached out to people online and irl affected by visibility reduction

19 people participated in the online survey

in the end, I do not have an answer to my user research question: none of the notification UIs reduced the feeling of powerlessness

I see two areas for improvement

- the notification design – Participants did not care about the timing, the scale, or the option to ask why. Instead, they asked for explanations that are less brief and vague, and for notifications that unpack which element in their post or profile caused the visibility reduction decision. In the pilot, I focused on the “Adult Nudity and Sexual Activity Community Standard”, but to test this kind of notification, I would need to be more specific, e.g. focus on one shadow banning scenario, such as transgender people’s accounts falling victim to over-flagging.

- measurements – For a pilot, it’s better to collect as much qualitative data as possible instead of being obsessed with quantitative measures.

noW Who?!

despite this failure, the pilot showed that the DSA could provoke user research and, if done well, improve how people experience transparency and contestation online

then, who could do this user research?

H1 user researchers working for platforms

right now UI/UX professionals could be working on these notifications to comply with the DSA

but they could be stuck in the middle of internal conflicts between public policy, trust and safety, and global operation teams3 – in addition, they could be under the impression that their work is a “ceremonial prop” (Christin et al. 2024)

however as Angèle Christin, Michael S. Bernstein, Jeffrey T. Hancock, Chenyan Jia, Marijn N. Mado, Jeanne L. Tsai, and Chunchen Xu discuss, social media platforms are not monoliths

and on June 27, the Meta Oversight Board stated that it aims to “focus on issues that matter to users – such as demoted content” (“demoted content” could refer to shadow banning)4

maybe the Oversight Board’s impulse could improve the UI/UX faster than the DSA enforcement? not sure, but let’s be optimistic!

H2 researchers and designers part of the Commission DG Connect investigative efforts

regulators have to check whether platforms comply with the rules

as I am focusing on Very Large Online Platforms here, the authority in charge of the DSA enforcement is the EU Commission5

the Commission could send a request for information informed by findings published in an academic journal, and/or UI designs developed by an independent design lab (。•̀ᴗ-)✧6

right now the EU Commission DG Connect is building a network of experts, but how can we be sure the UI/UX resources are there? we currently don’t know because the oversight structure is still taking shape7

H3 you tell me margaux.vitre(at)gmail.com

Credit to the goat, Emilie Lor/@_kknomos for the slutty sea slug inspired by jojo & the font

1. this is a non-exhaustive list of the people and collectives who made the pilot study possible and to whom I owe my eternal gratitude

2. this is a simplification of shadow banning, please read this if you are interested

3. useful article from Meta Transparency Center to understand which team is in charge of content moderation https://transparency.meta.com/fr-fr/enforcement/detecting-violations/reviewing-high-visibility-content-accurately/

4. https://www.oversightboard.com/news/2023-annual-report-shows-boards-impact-on-meta/

5. here the DG Connect publishes updates concerning Very Large Online Platforms and Search Engines https://digital-strategy.ec.europa.eu/en/policies/list-designated-vlops-and-vloses#ecl-inpage-lqfbhgbn

6. what if this lab becomes real one day?

7. great article by Dr Julian Jaursch on what’s to come https://www.interface-eu.org/publications/a-look-ahead-at-eu-digital-regulation-oversight-structures-in-the-member-states
